Incrementality Testing: A Solution for Marketing Measurement

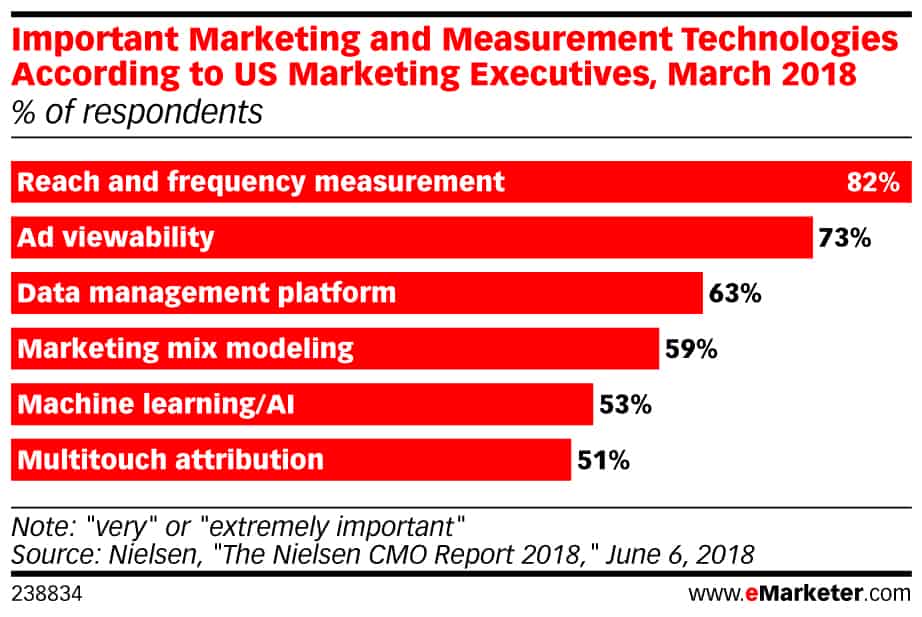

Publishers are always launching new campaign types, optimizations, ad formats, and targeting capabilities. Advertisers have an unprecedented opportunity to convey messages to their audiences. Yet, they face growing pressure to quickly identify the methods that drive business goals. Could incrementality testing be the next big solution to marketing measurement?

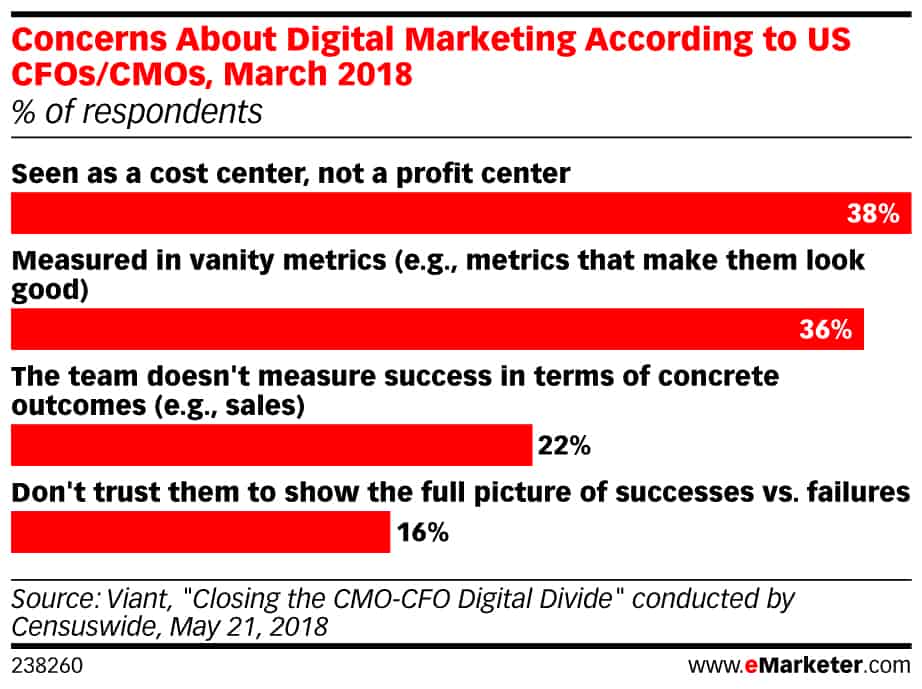

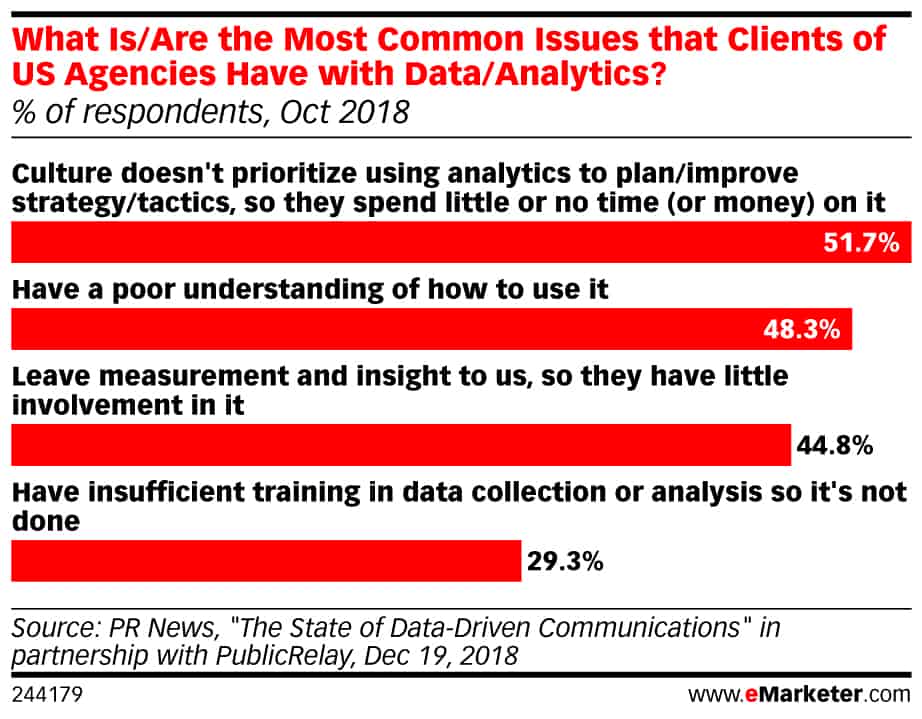

Differing methods of attribution and measurement add complexity. Varying indicators of value and revenue can tell conflicting stories based on sourcing. How can brands make informed, data-driven decisions to forecast the impact of different advertising investments on their business?

As brands seek more precise methods of proving efficacy, incrementality testing has become one of the hottest topics in online advertising. Skai has insights gleaned from more than five years’ worth of incrementality measurement across platforms.

How Best To Measure Efforts?

A prime example of the issue facing marketers is understanding the effect of retargeting strategies. Another is the industry-wide buzz around video ads, which has made marketers watchful of the revenue actually driven by their investment in video creation.

Similarly, the rise of mobile advertising has inspired skepticism that many attribution tools, in fact, misconstrue these investments. Advertisers are reasonably tasked with determining the incrementality of new and emerging channels against tried-and-true methods. However, brands remain uncertain of the true value of different targeting strategies, channels, and ad formats.

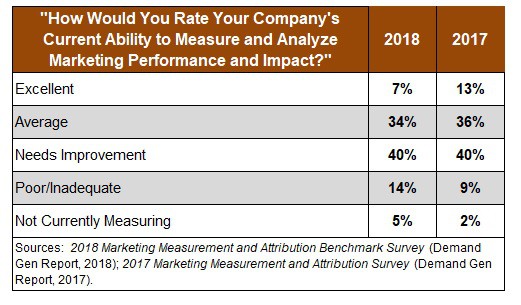

To date, the ad industry has been unable to provide clarity. The problem is exacerbated by the lack of a unifying approach to measurement. Among these challenges are walled-garden data sources, upper-funnel metrics, cross-device approximation, and online vs. offline activity. Marketers often report having trouble measuring the business impacts of specific tactics. As with any measurement modeling, data quality can make or break results.

Find the Business Impact

Incrementality testing in marketing compares results between a test group, which is exposed to the marketing activity, and a control group, which is not. Using this method, marketers can isolate the affected variables. They can then clearly assess the immediate business impact and formulate data-driven actions to take.

With incrementality testing, advertisers can better understand whether their KPIs are a direct result of their own campaigns or of extraneous effects. Advanced AI technology helps these tests achieve statistical significance even for low-volume, small-budget campaigns.

Also, a cross-channel testing methodology provides measurement and insights into the indirect effects of the tactic. This is what is generally referred to as the ‘halo effect’. Incrementality testing can easily track business implications using consistent, comparable and coordinated data sources.

Incrementality testing is something that Skai’s data science team has developed over time. Our methodology leverages testing best practices, adapted to a variety of specific lift-measurement use cases.

Our team consists of data scientists knowledgeable across verticals, with experience scaling incrementality testing across platforms and strategies. If your team faces challenges related to testing, attribution, or measurement, we’re eager to help and would love to connect!

Incrementality Testing: 5 Gaps of Attribution Measurement

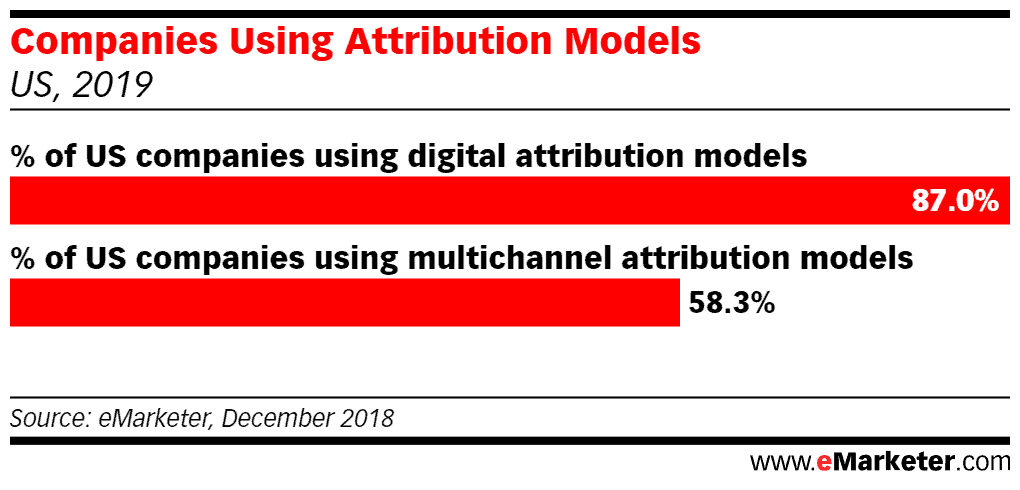

Attribution uses models to measure advertising investments. A huge advantage of attribution modeling is data continuity, meaning that a brand doesn’t need to alter marketing plans mid-stream in order to achieve actionable insights. In fact, a well-formulated model can account for regular fluctuations and realignments. Data can be narrowed down to individual keywords and ads, and even cross-channel consumer journeys.

While attribution has proven a largely scalable solution across verticals, it also poses a new set of reporting challenges.

Incrementality Testing vs Attribution #1 – Attribution is only as good as the data

Advertisers must be able to account for the full consumer journey in order to track ads and their impact. But consumer journeys can be difficult to follow, especially those which occur across multiple channels and devices!

An ideal attribution model identifies individual users across devices and channels, but in cases when this isn’t easily accomplished, data quality is compromised. When the measurement is questionable, modeling is inaccurate at best.

Many advertisers have reason to believe that mobile advertising is, in fact, more effective than their reporting indicates, but that it is undervalued due to insufficient device tracking. Linear measurement often overlooks a large volume of conversions which begin on mobile but finish on desktop devices. This imprecision is particularly evident with mobile-heavy publishers such as Facebook, Snap, Pinterest, and Twitter. Similarly, a lack of sufficient data to measure video impact means that video promotions are regularly undervalued.

In those scenarios, incrementality testing proves particularly valuable by focusing on individual investments. It can directly measure the impact upon overall business results and eliminate the need to identify and measure each step in the consumer journey.

Incrementality testing accomplishes cause-and-effect measurement and takes out the guesswork.

Incrementality Testing vs Attribution #2 – Attribution models are subjective and non-transparent

Any path-to-conversion with multiple steps can create uncertainty regarding how and how much each step contributed to the final purchase. Competing attribution models paint conflicting stories about how each step ties to the end action.

Today, many companies even recruit AI teams to develop in-house attribution models based on machine learning. While this can result in greater efficiency and robust data sets, it leads to even more subjective models.

As advertisers seek the newest and most advanced attribution modeling available, old modeling quickly becomes outdated and redundant. In recent years, the breadth of attribution models available has given data teams additional challenges. Marketers struggle to coordinate disparate reporting, even among individual industries, companies, teams, and time periods.

In instances where attribution’s subjectivity is a cause for concern, incrementality testing lends additional assurance. By testing a specific investment in the customer journey, incrementality testing can measure its direct impact. Incrementality testing can also measure halo effects on the ecosystem of investments without making assumptions.

Incrementality Testing vs Attribution #3 – Attribution is not an island

A key benefit of attribution is that it accounts for ongoing business fluctuations, such as big budget changes, holidays, and special events. But this holistic analysis, which assesses an array of inputs, leaves room for ambiguity regarding the actual value of individual investments. It tends to mistake correlation for causation. Advertisers at companies affected by seasonality must determine whether growth results from their campaigns or from external factors.

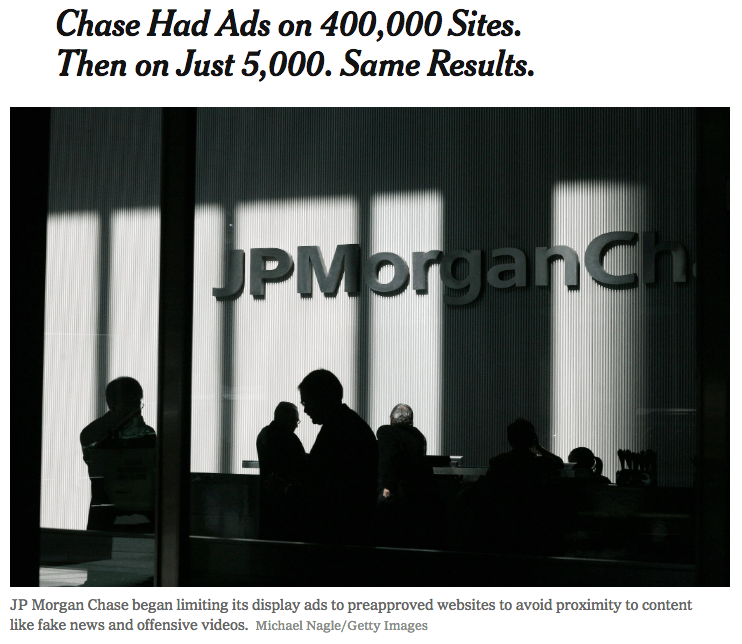

This gap frequently shows up in upper-funnel actions and initial user interactions. For instance, attribution often credits a high volume of impressions to display ads. In 2017, The New York Times reported that Chase saw almost indistinguishable results between two very different promotions. One served display ads on 400,000 websites and another served ads on only 5,000 sites! The fact that a campaign on 5,000 sites could drive the same results as one on 400,000 suggests a disconnect in the actual effect of the display ads served.

Incrementality testing in marketing can help combat ambiguity by isolating individual investments and standardizing extraneous parameters. This measurement is agnostic of influences such as seasonality, geography, and cross-marketing.

Incrementality Testing vs Attribution #4 – Attribution falls short with traditional media

Successful attribution requires comprehensive data for engagement and conversion actions. Funnels with offline components—especially traditional formats such as TV, radio, and billboards—are complicated by the inability to measure impressions and engagement. In these cases, extractable statistical insights cannot be conclusively connected to specific consumers. Isolating offline promotions’ true impact is virtually impossible.

This gap often means that too few conversions are attributed to offline ads, understating the effect of offline channels. In this case, incrementality testing enables advertisers to measure the difference between a test group, which has been exposed to the offline ad, and a control group, which has not.

Incrementality Testing vs Attribution #5 – Attribution falls short with future investments

Attribution modeling attempts to identify causality between conversion actions and preexisting investments. However, with the addition of new publishers and advertising methods to the consumer funnel, marketers need to consider the value of new investments. Attribution models are historical in nature and therefore lack sufficient data to perform these calculations. Advertisers are left to make investments and measure the impact afterward rather than in advance.

In 2017, Skai launched full support for Pinterest campaigns. While some advertisers adopted immediately, others questioned the value of investing in a new channel. But by using small test budgets, clients were able to quantify the effect of introducing a new platform into their consumer journeys.

Using an incrementality test, Skai clients Belk and iCrossing determined that Pinterest advertising increased their online ROAS 2.9x and in-store ROAS 31.4x!

Incrementality testing can be used to evaluate future investments. It can use small budgets to assess the value of the new investment with statistical confidence.
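As a rough illustration of how a small-budget test might be turned into a return figure, the sketch below computes incremental ROAS as incremental revenue divided by test spend. The function name, the `scale` adjustment, and every number are hypothetical, and this is not the calculation behind the Belk and iCrossing result above.

```python
def incremental_roas(test_revenue, control_revenue, test_spend, scale=1.0):
    """Incremental revenue earned per unit of test spend.

    `scale` is an assumed adjustment that sizes the control group's revenue
    to the test group (for example, test baseline / control baseline).
    """
    if test_spend <= 0:
        raise ValueError("test_spend must be positive")
    # Revenue the test group would have been expected to earn without the new investment.
    expected_without_investment = control_revenue * scale
    incremental_revenue = test_revenue - expected_without_investment
    return incremental_revenue / test_spend


# Hypothetical figures, for illustration only.
print(incremental_roas(test_revenue=120_000, control_revenue=100_000, test_spend=8_000))  # 2.5
```

A value above 1.0 would mean the test generated more incremental revenue than it cost. Whether that figure is trustworthy still depends on the test reaching statistical confidence.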

Conscious of these gaps, advertisers tasked with making decisions based on attribution face even more questions. How can attribution be directly translated to user actions? Can models be adjusted during the course of a promotion? Can advertisers determine which scenarios justify switching models?

We have seen a shift over the last three years in the way marketers evaluate attribution modeling and results. A decade ago, marketers considered full funnels measurable and data accurate and actionable. Today, however, they feel comparatively limited, with mobile, in particular, disrupting measurement and necessitating new solutions. Proactive advertisers today will often combine attribution with incrementality testing. Marketers can use incrementality testing to validate attribution measurement and adjust modeling to better measure true ad performance.

Incrementality Testing: Selecting the Right Split

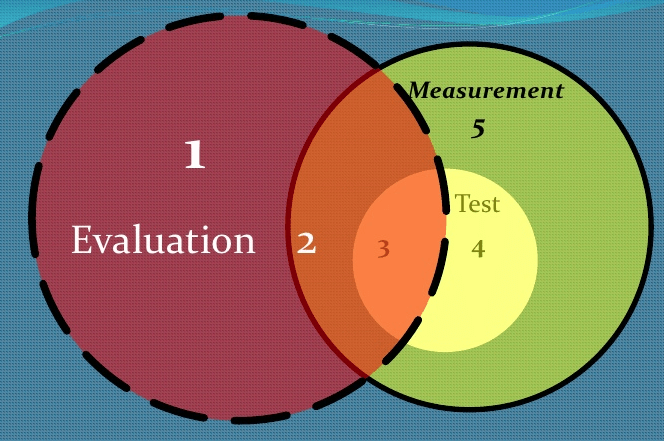

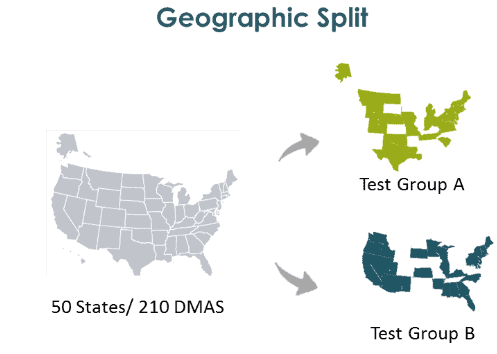

The three stages of an incrementality test are:

- Preparation – Split some part of your addressable market into A & B groups

- Intervention – Expose one of the groups to a new variable allowing enough time for any difference to become apparent

- Measurement – Examine the performance of groups A and B pre- and post-intervention to understand the impact
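The measurement stage can be sketched with a simple difference-in-differences calculation. This is a generic textbook approach rather than a description of any particular vendor's methodology. It assumes group A received the intervention and group B did not, and the function names and figures are made up for illustration.

```python
def diff_in_diff(a_pre, a_post, b_pre, b_post):
    """Change in the exposed group (A) minus change in the unexposed group (B)."""
    return (a_post - a_pre) - (b_post - b_pre)


def relative_lift(a_pre, a_post, b_pre, b_post):
    """Lift of A over the outcome it would have had if it had grown like B."""
    if a_pre <= 0 or b_pre <= 0:
        raise ValueError("pre-period values must be positive")
    expected_a_post = a_pre * (b_post / b_pre)
    return (a_post - expected_a_post) / expected_a_post


# Hypothetical weekly revenue before and after the intervention.
a_pre, a_post = 1000, 1320   # group A: exposed to the new variable
b_pre, b_post = 1000, 1100   # group B: business as usual

print(diff_in_diff(a_pre, a_post, b_pre, b_post))            # 220
print(round(relative_lift(a_pre, a_post, b_pre, b_post), 3))  # 0.2
```

Group B grew by 100 on its own, so only 220 of group A's 320 increase is credited to the intervention. Comparing pre- and post-periods in both groups is what keeps seasonality and other shared effects out of the result.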

Incrementality Test vs. A/B Testing: The Crucial Difference

So far, you might be thinking that this all sounds similar to traditional A/B testing, which compares things like subject lines, images, or landing pages to see which variant performs best.

But the truth is, an incrementality test is very different.

In incrementality testing, we measure the impact of the test on business-level metrics such as revenue, new customers, or site visitors. In traditional A/B tests, it’s often about media optimization. We look for the impact on more specific campaign performance metrics such as CTR, attributed conversion rate, etc. However, we cannot rely on attribution to understand the business-level impact. The performance data we use to measure impact in an incrementality test must not rely on attribution.

Measuring impact on business metrics also means we have to be very thoughtful as to how we set up our split test. In an incrementality test, the split into groups A and B should be done in such a way that the intervention performed on one group will have little to no impact on the other group. If not, the results of the test may totally miss or greatly exaggerate the impact of the intervention. In other words, you need a clean split with minimal crossover.

Let’s examine three of the most common split types used in traditional A/B testing. Which, if any, are appropriate for an incrementality test?

- Auction split

- Audience split

- Geo split

For each type of split we’ll evaluate it against the criteria mentioned above as well as more general criteria that are important for all kinds of A/B test splits:

- Ability to measure impact without relying on attribution

- Ability to intervene in one group without impacting the other

- Good correlation between performance metrics in both groups

- The randomness of the split

Auction Split

How it works: An auction-based split randomly assigns a user to group A or B in real-time, i.e. when they are about to be exposed to an ad.

Pros: Theoretically, this allows for a totally random split. This is ideal from a statistical point of view and should lead to a good correlation between the groups.

Cons: An auction-based split has one potential flaw in that the random assignment occurs every auction. Thus, the same user can be exposed to advertising from both groups A & B.

Right for incrementality testing?: NO! This flaw rules out such an approach for any kind of incrementality testing. The probability is high that intervention in one group will have an impact on the other. Furthermore, since there’s no clean separation of users between groups A and B, there’s really no value in looking at performance data without attribution. There’s no way to associate unattributed conversions or revenue with either group A or B.
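To see how quickly crossover builds up, here is a small simulation of a per-auction coin flip. It is a toy model under simplifying assumptions: every auction is an independent 50/50 assignment, and every user takes part in the same number of auctions. The function names are illustrative.

```python
import random


def expected_share_in_both_groups(auctions_per_user):
    """Probability that independent 50/50 flips put one user in both A and B."""
    return 1 - 2 * 0.5 ** auctions_per_user


def simulated_share_in_both_groups(n_users, auctions_per_user, seed=0):
    """Flip a coin at every auction and count users who end up seeing both groups."""
    rng = random.Random(seed)
    mixed = 0
    for _ in range(n_users):
        groups_seen = {rng.choice("AB") for _ in range(auctions_per_user)}
        if len(groups_seen) == 2:
            mixed += 1
    return mixed / n_users


print(expected_share_in_both_groups(5))           # 0.9375
print(simulated_share_in_both_groups(10_000, 5))  # close to the value above
```

Under these assumptions, a user who takes part in just five auctions has roughly a 94% chance of being exposed to both groups, which is why a per-auction split cannot give a clean separation.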

Audience Split

How it works: An audience split assigns users to groups A and B randomly but reproducibly such that the same user will always be assigned to the same group. This is generally done using hashed cookies or other forms of a user identifier.

Pros: Like an auction-based split, this also creates a very random split of two well-correlated groups.

Cons: There are many limitations when it comes to incrementality testing. First, the split is only as good as your testing technology’s ability to identify unique users, which is trickier in today’s multi-screen, app-filled world. Cookie-based audience splits are likely to assign multiple devices/browsers from the same user to different groups. True audience-based splits are possible only for publishers who have a high percentage of cross-device logins. Second, in order to measure impact without relying on attribution, you need to be able to assign transactions to either group A or B based on the user identifier, without the necessity of a preceding click or impression.

Facebook is able to make this assignment for transactions recorded by its pixel, but the process is not transparent. It doesn’t expose the user-level audience assignments to allow third-party technologies to evaluate performance. A further weakness of audience-based splits is that they cannot be used to measure offline impact, such as in-store or call-center transactions, or the impact of offline ads such as TV and radio. This is because it’s very difficult to reliably connect online user identifiers to offline transactions.

Right for incrementality testing?: NO! Given the significant number of drawbacks, this is also not the best approach.
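A minimal sketch of the "random but reproducible" assignment described above, together with the multi-device problem it runs into. It assumes each device carries its own cookie-style identifier. The salt, the identifier format, and the function names are made up for illustration and do not reflect how any specific publisher implements its split.

```python
import hashlib


def assign_group(identifier: str, salt: str = "test-001") -> str:
    """Map an identifier to group A or B; the same input always gives the same group."""
    digest = hashlib.sha256(f"{salt}:{identifier}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def share_of_people_split_across_groups(n_people: int) -> float:
    """Each hypothetical person has two cookies (phone and laptop), hashed separately."""
    split = sum(
        assign_group(f"person-{i}-phone") != assign_group(f"person-{i}-laptop")
        for i in range(n_people)
    )
    return split / n_people


# The same identifier is always assigned to the same group.
print(assign_group("cookie-123") == assign_group("cookie-123"))  # True

# Without a cross-device login, about half of two-device users straddle both groups.
print(share_of_people_split_across_groups(2000))
```

Hashing a logged-in user ID instead of a per-device cookie would keep all of a person's devices in one group, which is why this kind of split depends on a high share of cross-device logins.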

Geo Split

How it works: A geo-based split assigns users to groups using the ability to geo-target in both traditional and digital marketing campaigns. Geo splits generally work at the city or DMA level—cities or DMAs are randomly assigned to groups A and B.

Pros: Geo splits significantly simplify measurement since one can easily look at both online and offline transactions by geo without having to perform attribution. They have the best potential to reduce the chance of intervention on one group influencing the other. A further advantage is that a geo-based split is highly transparent. You can easily evaluate the results of the test against multiple data sources — even those that weren’t considered in the planning of the test. It’s the only approach that allows you to measure halo effects such as the impact of investment in one channel on the revenue attributed to another.

Cons: Geo splits are less random than audience or auction-based splits. But using a split methodology to create balanced and well-correlated groups overcomes this problem.

Right for incrementality testing?: Yes…if done correctly! At Skai, we’ve been using the geo-based approach for A/B testing and incrementality testing for more than four years. We’ve applied machine learning approaches to create our own algorithm that creates geo splits with balanced and well-correlated groups. The geo-based approach can run successful tests that yield meaningful results and stand up to analytical scrutiny.
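To make "balanced and well-correlated groups" concrete, here is a deliberately naive sketch that is not Skai's algorithm. It tries many random half-and-half assignments of geos and keeps the one whose two groups track each other most closely in historical data while staying similar in size. The scoring rule, the function names, and the demo data are all assumptions made for illustration.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def group_series(history, geos):
    """Add up the weekly series of the given geos into one series per group."""
    return [sum(week) for week in zip(*(history[g] for g in geos))]


def best_geo_split(history, trials=500, seed=0):
    """Random search: score = correlation between groups minus their size imbalance."""
    rng = random.Random(seed)
    geos = sorted(history)
    best = None
    for _ in range(trials):
        shuffled = geos[:]
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        group_a, group_b = shuffled[:half], shuffled[half:]
        series_a = group_series(history, group_a)
        series_b = group_series(history, group_b)
        corr = pearson(series_a, series_b)
        total_a, total_b = sum(series_a), sum(series_b)
        imbalance = abs(total_a - total_b) / (total_a + total_b)
        score = corr - imbalance
        if best is None or score > best[0]:
            best = (score, sorted(group_a), sorted(group_b), corr, imbalance)
    return best


def make_demo_history(seed=1):
    """Hypothetical weekly revenue for eight geos over twelve weeks."""
    rng = random.Random(seed)
    bases = [100, 80, 120, 60, 90, 110, 70, 130]
    history = {}
    for i, base in enumerate(bases):
        history[f"geo_{i}"] = [
            base * (1 + 0.1 * (week % 4)) + rng.uniform(-5, 5) for week in range(12)
        ]
    return history


score, group_a, group_b, corr, imbalance = best_geo_split(make_demo_history())
print(group_a, group_b, round(corr, 3), round(imbalance, 3))
```

A production approach would need much more than this, for example holdout validation of the correlation and handling of geos that differ in size by orders of magnitude. The sketch only shows why a deliberate split methodology can compensate for geo splits being less random than audience or auction-based ones.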

While this is certainly not an exhaustive list of splits, you won’t find another type that lends itself as well to an incrementality test as a geo split does.

What to Do Next?

Interested in learning more about how Skai can help you better test, execute, and orchestrate your digital marketing efforts? Contact us today to set up a discussion.